76. Conjugate Gradients#

The gradient method is an improvement to the Richardson method since it does not need the spectral bounds explicitly. The Chebyshev method is an improvement to the Richardson method since the number of iterations are reduced from \((\log \varepsilon^{-1}) \, \kappa\) to \((\log \varepsilon^{-1}) \, \sqrt{\kappa}\).

The conjugate gradients method combines both advantages.

All four methods need one matrix-vector multiplication with the system matrix \(A\), and one preconditioner application \(C^{-1}\) per iteration, plus some vector updates. The gradient method and conjugate gradient method need inner products.

The idea behind the cg-method is to minimize the Ritz functional

over the Krylov-space

In every iteration we increase the space to minimize, and compute the new minimizer. This can be done efficiently by computing an orthogonal basis for \({\mathcal K}^n\).

Since every polynomial iteration (such as the Chebyshev method) produces iterates \(x^n \in {\mathcal K}^n\), we obtain error estimates for the cg-method immediately from the Chebyshev method.

76.1. Solving the minimization problem#

Assume an \(n\)-dimensional sub-space \(V^n \subset {\mathbb R}^N\) is spanned by basis vectors \(p_1, \ldots, p_n\). Let \(P \in {\mathbb R}^{N \times n}\) contain the basis vectors \(p^k\) as column vectors. We want to solve the minimization problem

Since every \(x \in V^n\) can be represented as \(x = P y\) with \(y \in {\mathbb R}^n\), the minimization problem can be rewritten as:

The solution is

which is obtained by solving a small, \(n \times n\)-dimensional linear system. The original solution \(x\) is

If the vectors \(p_i\) are \(A\)-orthogonal (also known as \(A\)-conjugate), i.e.

then the matrix \(P^T A P\) is diagonal, and the minimization problem can be solved very easily:

If we increase the space \(V^n\) to \(V^{n+1}\) by adding one more vector \(p_{i+1}\), orthogonal to all previous \(p_1, \ldots , p_n\), then the minimizer \(x_{n+1}\) on \(V^{n+1}\) can be updated cheaply from the minimizer \(x^n\) on \(V^n\):

76.2. Expanding the Krylov-space#

Assume we are given the Krylov space \({\mathcal K}^n\), and we have computed the minimizer \(x_n \in {\mathcal K}^n\).

The (negative) gradient of \(f(x) = \tfrac{1}{2} x^T A x - b^T x\) is

If \(r_n = 0\), the problem is solved and we stop.

Since \(r_n\) is \({\mathbb R}^N\)-orthogonal to \({\mathcal K}^n\), for

This is seen as \(\left< w_n, z \right>_C = \left< C w_n, z \right> = r_n^T z = 0\) for all \(z \in {\mathcal K}^n\).

Since \(x_n\) is in \({\mathcal K}^n\), and \(w_n\) is obtained by yet another applications of \(C^{-1}\) and \(A\), we get \(w_n \in {\mathcal K}^{n+1}\). Together with \(C\)-orthogonality we have

Finally, we construct an \(A\)-orthogonal basis by Gram-Schmidt orthogonalization:

Here the miracle of the cg-method pops up. The inner products in the numerator are

Since \(r^n \; \bot \; {\mathcal K}^n\), and \(C^{-1} A p_i \in {\mathcal K}^{i+1}\), this inner product vanishes for all \(i \leq n-1\). Only the last term in the sum remains:

In Saad, “Iterative Solvers”, the Preconditioned Conjugate Gradient method is Algorithm 9.1, page 277. The inner products are reformulated by recursions which have better stability with respect to roundoff errors.

Given \(x_0\)

\(r_0 = b - A x_0\)

\(p_0 = C^{-1} r_0\)

for \(k = 0, 1, 2, \ldots\)

\(\qquad \alpha_j = \frac{r_k^T w_k}{p_k^T A p_k}\)

\(\qquad x_{k+1} = x_k + \alpha_j p_k\)

\(\qquad r_{k+1} = r_k - \alpha_j A p_k\)

\(\qquad w_{k+1} = C^{-1} r_{k+1}\)

\(\qquad \beta_k = \frac{r_{k+1}^T w_{k+1}}{r_k^T w_k}\)

\(\qquad p_{k+1} = w_{k+1} + \beta_k p_k\)

def CG(A,b,pre, maxit=200, tol=1e-8, callback=None):

x = b.CreateVector()

w = b.CreateVector()

r = b.CreateVector()

p = b.CreateVector()

ap = b.CreateVector()

r.data = b

p.data = pre*r

wrn = InnerProduct(r,p)

err0 = sqrt(wrn)

x[:] = 0

for it in range(maxit):

ap.data = A * p

pap = InnerProduct(p, ap)

wr = wrn

alpha = wr / pap

x.data += alpha * p

r.data -= alpha * ap

w.data = pre*r

wrn = InnerProduct(w, r)

err = sqrt(wrn)

if callback: callback(it,err)

if err < tol * err0: break

beta = wrn / wr

p *= beta

p.data += w

return x

from ngsolve import *

mesh = Mesh(unit_square.GenerateMesh(maxh=0.1))

fes = H1(mesh, order=1)

u,v = fes.TnT()

a = BilinearForm(grad(u)*grad(v)*dx+10*u*v*dx).Assemble()

f = LinearForm(x*y*v*dx).Assemble()

gfu = GridFunction(fes)

pre = a.mat.CreateSmoother(GS=False)

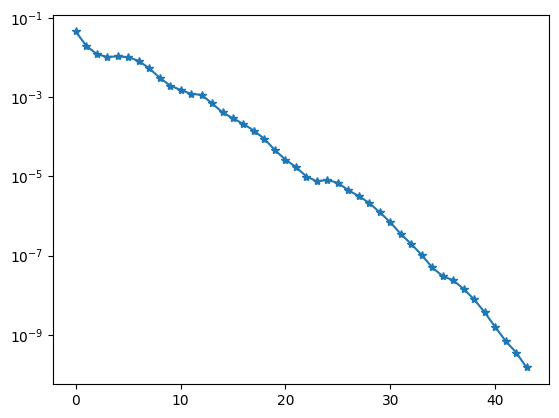

hist=[]

gfu.vec.data = CG(a.mat, f.vec, pre,

callback=lambda it,err: hist.append(err))

import matplotlib.pyplot as plt

plt.yscale('log')

plt.plot(hist, '-*');

from ngsolve.webgui import Draw

Draw (gfu);